Consolidated Data Models

One of the things we strive to do at Tech Guys Who Get Marketing is ensure that clients are set up with the right infrastructure. The “right” tech infrastructure is the one that supports your business goals and objectives and doesn’t pigeonhole or handcuff you once the business exceeds X level of complexity or scale. Instead, it supports you through that growth without radically increasing tech overhead. The challenge with the “right” infrastructure is that it’s not always easy for a non-techie to picture what the data infrastructure has to look like when you’re 40%, 50%, or 80% of the way towards your big business goals. The thought process usually ends up as, “well, this tool will work now, let’s use it!”

That’s pretty much the same as saying, “you know, this pistol works pretty well, let’s go fight WWII with it.” It’s not the right tool for the long-term success you’re probably looking for. That pistol might get you decently far into the fight, but it surely can’t take out tanks or aircraft.

Okay, enough of the war analogy; I think the point is clear. Choosing technology for end-game results is a wildly different process than choosing technology for the here and now. Here-and-now techs are great at patchwork and at saving the day when you need it saved, but that doesn’t mean they have the foresight to plan for end-game scale. In fact, if an individual tech becomes accustomed to firefighting day in and day out, perhaps because you under-staff their team, he or she will settle into that routine and unintentionally become a poor big-picture planner.

I want to share the way one must think in today’s world to plan for complexity and scale. The term I use to describe the architecture that will get you where you want to go is “Consolidated Data Model”.

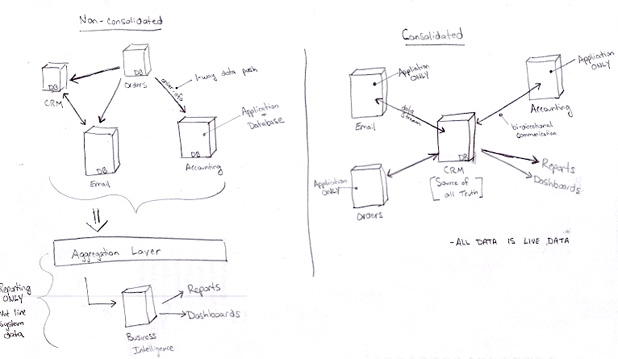

So we have two types of data models: non-consolidated and consolidated. The former is what has evolved out of the technology space over the years. The latter is a logical approach that existed at the very beginning, in the pre-PC era, and is now coming back into the mainstream with the advent of cloud computing and Software-as-a-Service (SaaS) tools.

- Pre-PC: Mainframes, UNIX/SCO, dumb terminals

- PC Era: Personal Computers, Windows/Mac, smart terminals

- Cloud Era: Cloud Servers, SaaS Applications, smart local terminals that act dumb

Some quick definitions. Dumb terminals are terminals connected to a computer that merely display and interact with that computer; they are not computers themselves, so they’re “dumb”. I’m personally most familiar with SCO Unix environments, where a business had, let’s say, “8 dumb terminals” around the office that all interacted with 1 computer (the server). One brain powered all 8 users. In the smart-terminal arena, the terminals became full-blown computers with very capable processors. This allowed for a far less powerful server and made the applications those computers ran much richer (color! whoohoo!) and faster.

Now the era of the cloud is upon us. While network engineers have been building PC networks with smart terminals for quite some time, we’re seeing a fundamental shift in the way data is organized within a network. The reason behind the shift is that the “network” now consists of the entire Internet, and the speeds needed for applications to run over the Internet are finally here: 10-15+ Mbps is common.

The diagrams below show the difference between the two models. The big difference is defining, as the source of all truth, a primary data tool that has integrated reporting and dashboarding, has a complete API / metadata API, and whose data layer is fully extensible (adding new objects, relationships, etc.). When a single system is the source of all truth and data, all the applications connected to it become “dumb applications” that operate on the data from that single source. This is a shift away from taking “partially integrated” smart systems, running their data through an aggregation layer (a Cast Iron-type application), and feeding it into a Business Intelligence server where reporting is then done.
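To make the idea concrete, here is a minimal Python sketch of a consolidated store, assuming a toy in-memory model. The class `ConsolidatedStore`, its methods, and the two apps (`web_form_app`, `event_app`) are hypothetical illustrations, not any vendor’s API. The store holds an extensible schema (the metadata layer), the records, and built-in reporting; the apps keep no data of their own and only write into the single source.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Any


@dataclass
class ConsolidatedStore:
    """Hypothetical single source of truth that every connected app reads and writes."""

    # Metadata layer: object type -> allowed field names (extensible at runtime).
    schema: dict[str, list[str]] = field(default_factory=dict)
    # Data layer: object type -> stored records.
    records: dict[str, list[dict[str, Any]]] = field(default_factory=dict)

    def define_object(self, name: str, fields: list[str]) -> None:
        """Extend the data model with a new object type (e.g. a custom object)."""
        self.schema[name] = list(fields)
        self.records.setdefault(name, [])

    def insert(self, name: str, record: dict[str, Any]) -> None:
        """Store a record after checking it against the shared schema."""
        if name not in self.schema:
            raise KeyError(f"undefined object type: {name}")
        unknown = set(record) - set(self.schema[name])
        if unknown:
            raise ValueError(f"unknown fields for {name}: {sorted(unknown)}")
        self.records[name].append(dict(record))

    def report(self, name: str, group_by: str) -> dict[Any, int]:
        """Built-in reporting: count records per value of one field."""
        counts: dict[Any, int] = defaultdict(int)
        for record in self.records.get(name, []):
            counts[record.get(group_by)] += 1
        return dict(counts)


# Two hypothetical "dumb" applications: they hold no data of their own,
# they only write into the shared store.
def web_form_app(store: ConsolidatedStore, email: str) -> None:
    store.insert("Lead", {"email": email, "source": "web_form"})


def event_app(store: ConsolidatedStore, emails: list[str]) -> None:
    for email in emails:
        store.insert("Lead", {"email": email, "source": "event"})


if __name__ == "__main__":
    store = ConsolidatedStore()
    store.define_object("Lead", ["email", "source"])
    web_form_app(store, "ada@example.com")
    event_app(store, ["bob@example.com", "cy@example.com"])
    # Reporting runs directly on the single source; no aggregation layer.
    print(store.report("Lead", "source"))  # {'web_form': 1, 'event': 2}
```

In the non-consolidated model, each of those apps would keep its own copy of the leads, and a separate aggregation step would have to merge them before any report could run.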

What does this model shift net us? Well, the list looks like this:

- Consolidated real-time reporting – all data at your fingertips in a single system, so the ‘daily news’ is available quickly and efficiently

- Application Independence – with the data consolidated, each “application” you have talking to the primary data set is just a dumb application. If it needs to be replaced because a new application serves you better, it’s far less painful to rip out the old and add in the new, since the consolidated database has standards that define the view of data for that application type.

- Programming Costs – this model means LESS programming, because it requires all applications to leverage the same data structure, which already has reporting / dashboarding woven into the fabric
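Application independence and lower programming costs come from the same mechanism: code written once against a standard view of the data keeps working when the tool behind it changes. A minimal Python sketch, assuming the consolidated database defines a standard “email application” view; `EmailApp`, `OldMailer`, `NewMailer`, and `run_campaign` are hypothetical names for illustration only.

```python
from typing import Protocol

# Hypothetical standard "view" of contact data that the consolidated
# database defines for every email-type application.
Contact = dict[str, str]


class EmailApp(Protocol):
    def send(self, contacts: list[Contact], subject: str) -> list[str]:
        ...


class OldMailer:
    """Hypothetical current email tool."""

    def send(self, contacts: list[Contact], subject: str) -> list[str]:
        return [f"old-mailer -> {c['email']}: {subject}" for c in contacts]


class NewMailer:
    """Hypothetical replacement tool that speaks the same view."""

    def send(self, contacts: list[Contact], subject: str) -> list[str]:
        return [f"new-mailer -> {c['email']}: {subject}" for c in contacts]


# The contact data lives in the consolidated store, not inside either app.
CONTACTS: list[Contact] = [
    {"email": "ada@example.com", "name": "Ada"},
    {"email": "bob@example.com", "name": "Bob"},
]


def run_campaign(app: EmailApp, subject: str) -> list[str]:
    # Written once against the standard view; works with any conforming app.
    return app.send(CONTACTS, subject)


if __name__ == "__main__":
    print(run_campaign(OldMailer(), "Spring promo"))
    # Swapping tools changes one argument; the data and campaign code stay put.
    print(run_campaign(NewMailer(), "Spring promo"))
```

Ripping out `OldMailer` for `NewMailer` touches neither `CONTACTS` nor `run_campaign`, which is the “far less painful” swap described in the list above.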

That sums up the basics of a consolidated data model. There are a few companies out there that “get it” and are pushing this infrastructure. Salesforce.com is by far the leader, and its AppExchange developer community is chock-full of drop-in-and-go ‘dumb’ applications. NetSuite is in the game, along with a few other competitors in the enterprise application space. By my count, Salesforce.com is the only one that has done a good job supporting the developer community in a big way, as they see that the only way this type of model can exist is if developers have very open doors.